You may remember my posts on the latest VNX OE code, which brought with it some highly publicized enhancements, but I wanted to take this time to talk about some of the lesser-known ones. In this post, I’m going to talk about provisioning BLOCK luns for the FILE side.

Historically, in DART 5.x and 6.x, and even in VNX OE for FILE 7.0, any luns you wanted to provision to the FILE side had to be part of a RAID group. This meant that you couldn’t take advantage of any of the major block enhancements like Tiering and FAST VP. Well, starting with VNX OE for FILE 7.1, you can create luns from your pool and provision them to the FILE front end.

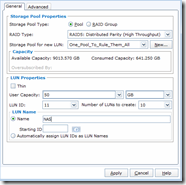

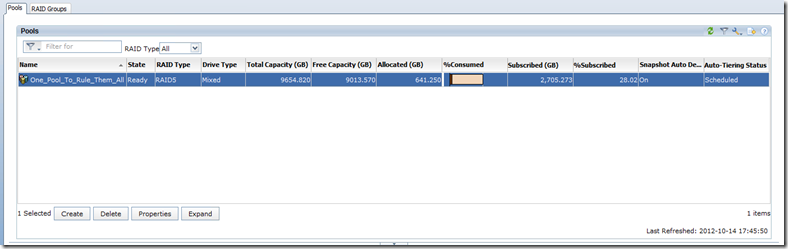

For those of you who are not familiar with this process, let me walk you through it. We’ll start with a pool. In this example, I created one large pool made up of FLASH, SAS, & NL-SAS drives.

Now I will create some luns. When creating luns for FILE, it is best to create them in sets of 10 and provision them as thick luns. You can always do thin filesystems on the FILE side later. In this example, I want to make sure to set the default owner to auto so that I get an even number of luns on SPA & SPB. And of course, to take advantage of the new tiering policy, I have that set to “High then auto-tier”.
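If it helps to see that layout written down before clicking through the wizard, here is a minimal planning sketch in Python. It does not talk to the array at all. The lun names, the 500 GB size, and the strict SPA/SPB alternation are my own assumptions for illustration; with the default owner set to auto, the array makes the actual ownership decision.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlannedLun:
    """One row of a provisioning plan (illustrative only, not an array API)."""
    name: str
    size_gb: int
    thin: bool
    owner: str
    tiering_policy: str


def plan_file_luns(count=10, size_gb=500, prefix="file_lun"):
    """Plan a set of thick luns for the FILE side.

    Assumptions: names, size, and the alternating owner are hypothetical.
    The alternation only mimics the even SPA/SPB split that the 'auto'
    default owner is expected to produce.
    """
    owners = ("SPA", "SPB")
    return [
        PlannedLun(
            name=f"{prefix}_{i:02d}",
            size_gb=size_gb,
            thin=False,  # thick luns; thin file systems can come later
            owner=owners[i % 2],
            tiering_policy="High then auto-tier",
        )
        for i in range(count)
    ]


if __name__ == "__main__":
    for lun in plan_file_luns():
        print(lun.name, lun.size_gb, lun.owner, lun.tiering_policy)
```

A plan like this is handy as a checklist: 10 luns, all thick, half on each SP, all with the same tiering policy.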

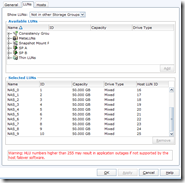

When this finishes, you’ll have 10 luns split between SPA & SPB, and we are ready to assign them to a host. The storage group for VNX FILE is called “~filestorage”. When adding your luns to this storage group, make sure you start with a Host LUN ID of 16 or greater. If you set it to anything less, the luns will not be detected on the rescan. Speaking of rescan, once you have assigned the luns, select “Rescan Storage Systems” on the right-hand side of the Storage section of Unisphere. Alternatively, you can run “server_devconfig server_2 -create -scsi -all” to rescan for disks. You will then need to rerun the command for your other datamovers as well.
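The two rules in this step (Host LUN IDs start at 16, and the rescan runs once per datamover) are easy to get wrong by hand, so here is a small Python sketch that only builds the numbers and command strings for you to review. Nothing in it runs against the array. The helper names and the `server_3` datamover are assumptions of mine; the rescan command itself is the one from this post, typed with plain ASCII hyphens.

```python
MIN_FILE_HLU = 16  # Host LUN IDs below this are not detected on the rescan


def assign_hlus(lun_names, first_hlu=MIN_FILE_HLU):
    """Map each lun name to a Host LUN ID, starting at 16 or higher.

    Hypothetical helper: it only computes the mapping you would then
    enter when adding the luns to the ~filestorage storage group.
    """
    if first_hlu < MIN_FILE_HLU:
        raise ValueError(
            f"Host LUN ID must be {MIN_FILE_HLU} or greater, got {first_hlu}"
        )
    return {name: first_hlu + offset for offset, name in enumerate(lun_names)}


def rescan_commands(data_movers):
    """Build one rescan command line per datamover (strings only)."""
    return [f"server_devconfig {dm} -create -scsi -all" for dm in data_movers]


if __name__ == "__main__":
    names = [f"file_lun_{i:02d}" for i in range(10)]  # hypothetical names
    for name, hlu in assign_hlus(names).items():
        print(f"{name} -> HLU {hlu}")
    # server_3 is an assumed second datamover; list your own here
    for cmd in rescan_commands(["server_2", "server_3"]):
        print(cmd)
```

Printing the commands instead of executing them is deliberate: you can paste them into the Control Station one at a time and check the result after each rescan.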

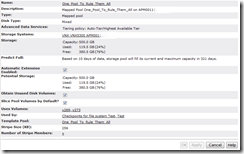

Now that we have our new luns scanned into the VNX FILE side of things, let’s go see what we have. You will notice that the new FILE pool shares the same name as the BLOCK pool, the drive types are “mixed”, and the tiering policy is specified under advanced services. That’s pretty much all there is to it. At this point you would go ahead and provision file systems as normal.

I hope you have enjoyed this look at a new enhancement cooked into the latest VNX code. Expect more posts on this as I continue the series. As always, I love to receive feedback, so feel free to leave a comment below.
