With cold & flu season fast approaching, it seems that people are just as worried about their array health as they are about their personal health. Working in the EMC Unified Storage Remote Support Lab, I see at least 10 new requests each day for a health check on an array, and most of the time there is nothing wrong. Today I’m going to show you some easy ways to tell whether there really is a problem. The VNX array is sold as Block, File, or Unified (a combination of both), and the health checks differ between the Block side and the File side. We’ll start with the File side.


Health Check of VNX FILE

There are two ways to run a health check on VNX FILE. The first method I will demonstrate is the traditional one, run from the command line. It has been in place since the older Celerra models and works the same way on them. To kick off your health check, log in to the Control Station with an SSH client and run the command “nas_checkup”. The process takes several minutes, so go get yourself a coffee and wait until you see output like the following:

```
[nasadmin@VNX nasadmin]$ nas_checkup
Check Version: 7.1.47.5
Check Command: /nas/bin/nas_checkup
Check Log    : /nas/log/checkup-run.120826-220724.log

-------------------------------------Checks-------------------------------------
Control Station: Checking statistics groups database....................... Pass
Control Station: Checking if file system usage is under limit.............. Pass
Control Station: Checking if NAS Storage API is installed correctly........ Pass
Control Station: Checking if NAS Storage APIs match........................ N/A
Control Station: Checking if NBS clients are started....................... Pass
Control Station: Checking if NBS configuration exists...................... Pass
Control Station: Checking if NBS devices are accessible.................... Pass
Control Station: Checking if NBS service is started........................ Pass
Control Station: Checking if PXE service is stopped........................ Pass
Control Station: Checking if standby is up................................. N/A
Control Station: Checking integrity of NASDB............................... Pass
Control Station: Checking if primary is active............................. Pass
Control Station: Checking all callhome files delivered..................... Pass
Control Station: Checking resolv conf...................................... Pass
Control Station: Checking if NAS partitions are mounted.................... Pass
Control Station: Checking ipmi connection.................................. Pass
Control Station: Checking nas site eventlog configuration.................. Pass
Control Station: Checking nas sys mcd configuration........................ Pass
Control Station: Checking nas sys eventlog configuration................... Pass
Control Station: Checking logical volume status............................ Pass
Control Station: Checking valid nasdb backup files......................... Pass
Control Station: Checking root disk reserved region........................ Pass
Control Station: Checking if RDF configuration is valid.................... N/A
Control Station: Checking if fstab contains duplicate entries.............. Pass
Control Station: Checking if sufficient swap memory available.............. Pass
Control Station: Checking for IP and subnet configuration.................. Pass
Control Station: Checking auto transfer status............................. Pass
Control Station: Checking for invalid entries in etc hosts................. Pass
Control Station: Checking for correct filesystem mount options............. Pass
Control Station: Checking the hard drive in the control station............ Pass
Control Station: Checking if Symapi data is present........................ Pass
Control Station: Checking if Symapi is synced with Storage System.......... Pass
Blades         : Checking boot files....................................... Pass
Blades         : Checking if primary is active............................. Pass
Blades         : Checking if root filesystem is too large.................. Pass
Blades         : Checking if root filesystem has enough free space......... Pass
Blades         : Checking network connectivity............................. Pass
Blades         : Checking status........................................... Pass
Blades         : Checking dart release compatibility....................... Pass
Blades         : Checking dart version compatibility....................... Pass
Blades         : Checking server name...................................... Pass
Blades         : Checking unique id........................................ Pass
Blades         : Checking CIFS file server configuration................... Pass
Blades         : Checking domain controller connectivity and configuration. Warn
Blades         : Checking DNS connectivity and configuration............... Pass
Blades         : Checking connectivity to WINS servers..................... Pass
Blades         : Checking I18N mode and unicode translation tables......... Pass
Blades         : Checking connectivity to NTP servers...................... Warn
Blades         : Checking connectivity to NIS servers...................... Pass
Blades         : Checking virus checker server configuration............... Pass
Blades         : Checking if workpart is OK................................ Pass
Blades         : Checking if free full dump is available................... Pass
Blades         : Checking if each primary Blade has standby................ Pass
Blades         : Checking if Blade parameters use EMC default values....... Info
Blades         : Checking VDM root filesystem space usage.................. N/A
Blades         : Checking if file system usage is under limit.............. Pass
Blades         : Checking slic signature................................... Pass
Storage System : Checking disk emulation type.............................. Pass
Storage System : Checking disk high availability access.................... Pass
Storage System : Checking disks read cache enabled......................... Pass
Storage System : Checking disks and storage processors write cache enabled. Pass
Storage System : Checking if FLARE is committed............................ Pass
Storage System : Checking if FLARE is supported............................ Pass
Storage System : Checking array model...................................... Pass
Storage System : Checking if microcode is supported........................ N/A
Storage System : Checking no disks or storage processors are failed over... Pass
Storage System : Checking that no disks or storage processors are faulted.. Pass
Storage System : Checking that no hot spares are in use.................... Pass
Storage System : Checking that no hot spares are rebuilding................ Pass
Storage System : Checking minimum control lun size......................... Pass
Storage System : Checking maximum control lun size......................... N/A
Storage System : Checking maximum lun address limit........................ Pass
Storage System : Checking system lun configuration......................... Pass
Storage System : Checking if storage processors are read cache enabled..... Pass
Storage System : Checking if auto assign are disabled for all luns......... N/A
Storage System : Checking if auto trespass are disabled for all luns....... N/A
Storage System : Checking storage processor connectivity................... Pass
Storage System : Checking control lun ownership............................ N/A
Storage System : Checking if Fibre Channel zone checker is set up.......... N/A
Storage System : Checking if Fibre Channel zoning is OK.................... N/A
Storage System : Checking if proxy arp is setup............................ Pass
Storage System : Checking if Product Serial Number is Correct.............. Pass
Storage System : Checking SPA SPB communication............................ Pass
Storage System : Checking if secure communications is enabled.............. Pass
Storage System : Checking if backend has mixed disk types.................. Pass
Storage System : Checking for file and block enabler....................... Pass
Storage System : Checking if nas storage command generates discrepancies... Pass
Storage System : Checking if Repset and CG configuration are consistent.... Pass
Storage System : Checking block operating environment...................... Pass
Storage System : Checking thin pool usage.................................. N/A
Storage System : Checking for domain and federations health on VNX......... Pass
--------------------------------------------------------------------------------
```

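As you can see, just about every check came back as Pass or N/A (which is fine). The exceptions are two warnings (domain controller connectivity and NTP connectivity) and one informational message (non-default Blade parameters). When any check returns a warning or error, nas_checkup prints more detail after the summary. Here is an example: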

```
One or more warnings have occurred. It is recommended that you follow the
instructions provided to correct the problem then try again.

-----------------------------------Information----------------------------------
Blades         : Check if Blade parameters use EMC default values
Information HC_DM_27390050398: The following parameters do not use the
EMC default values:
* Mover_name Facility_name Parameter_name Current_value Default_value
* server_2 cifs acl.extacl 0x00000003 0x00000000
* server_2 cifs acl.useUnixGid 0x00000001 0x00000000
* server_2 cifs djEnforceDhn 0x00000000 0x00000001
* server_2 cifs useUnixGid 0x00000001 0x00000000
* server_2 quota policy 'filesize' 'blocks'
* server_2 shadow followabsolutpath 0x00000001 0x00000000
* server_2 shadow followdotdot 0x00000001 0x00000000
* server_2 tcp fastRTO 0x00000001 0x00000000
* This check is for your information only. It is OK to use parameter
values other than the EMC default values.
* EMC provides guidelines for setting parameter values in the "Celerra
Network Server Parameters Guide" (P/N 300-002-691) that can be found
on http://powerlink.emc.com/
* If you need to change the parameter back to the default, run the
following command: "/nas/bin/server_param <mover_name> -facility
<facility_name> -modify <parameter_name> -value <default_value>"
* To display the current value and default value of a parameter, run
the following command: "/nas/bin/server_param <mover_name> -facility
<facility_name> -info <parameter_name>"
--------------------------------------------------------------------------------
```
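If you do decide to put some of these parameters back to their defaults, the Information block already gives the exact server_param syntax. The Python sketch below is a hypothetical helper (not an EMC tool) that parses the parameter rows from saved nas_checkup output and prints the matching revert commands so you can review them, rather than running anything. It assumes each row has exactly five whitespace-separated fields after the leading asterisk, as in the example above. Since non-default values are often deliberate, check each command before running it on the Control Station.

```python
import re
from typing import List, NamedTuple


class ParamDelta(NamedTuple):
    mover: str
    facility: str
    name: str
    current: str
    default: str


# One table row, e.g. "* server_2 tcp fastRTO 0x00000001 0x00000000".
# Values are either a single token or a single-quoted string such as 'blocks'.
ROW = re.compile(r"^\*\s+(\S+)\s+(\S+)\s+(\S+)\s+('[^']*'|\S+)\s+('[^']*'|\S+)$")


def parse_deltas(report: str) -> List[ParamDelta]:
    """Collect the non-default parameter rows from nas_checkup output."""
    rows = []
    for line in report.splitlines():
        match = ROW.match(line.strip())
        # The explanatory "*" lines have more than five fields, so they do not
        # match; the column-header row does match and is skipped explicitly.
        if match and match.group(1) != "Mover_name":
            rows.append(ParamDelta(*match.groups()))
    return rows


def revert_command(delta: ParamDelta) -> str:
    """Build the revert command using the syntax printed by nas_checkup."""
    return (
        f"/nas/bin/server_param {delta.mover} -facility {delta.facility} "
        f"-modify {delta.name} -value {delta.default}"
    )


if __name__ == "__main__":
    sample = """\
* Mover_name Facility_name Parameter_name Current_value Default_value
* server_2 tcp fastRTO 0x00000001 0x00000000
* server_2 quota policy 'filesize' 'blocks'"""
    for delta in parse_deltas(sample):
        # Print only; review before running anything on the Control Station.
        print(revert_command(delta))
```

Printing instead of executing keeps a human in the loop, which matches the advice in the output itself that non-default values are acceptable.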

————————————Warnings————————————

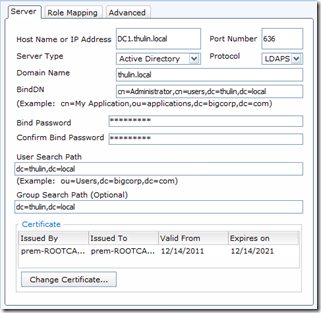

Blades : Check domain controller connectivity and configuration

Warning HC_DM_18800115743:

* server_2: PingDC failure: The compname ‘cifs01’ could not

successfully contact the DC ‘DC2K8X32’. Failed to access the pipe

NETLOGON at step Open NETLOGON Secure Channel: DC

connected:Access denied

Action : Check domain or Domain Controller access policies. For

NetBIOS servers, ensure that ‘allow pre-Windows 2000 computers to use

this account’ checkbox is selected when joining the server to the

Windows 2000 domain.

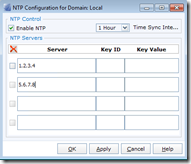

```
Blades         : Check connectivity to NTP servers
Warning HC_DM_18800115743:
* server_2: The NTP server '1.2.3.4' is online but does not
respond to any NTP query. As a consequence, the clock of the Data
Mover may be incorrect. This may cause potential failures when CIFS
clients log in. (Kerberos authentication relies on time
synchronization between the servers and the KDCs).
Action : Check the IP address of the NTP server, using the server_date
command. Make sure the NTP service is running on the remote server.
--------------------------------------------------------------------------------
```
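The NTP warning says the server is online but does not answer NTP queries. One quick spot check is to send a single SNTP client request (a 48-byte UDP packet to port 123) from your own workstation and see whether anything comes back. The Python sketch below does that with only the standard library. It is a rough check under a stated assumption: it tests reachability from wherever you run it, not from the Data Mover, so the server_date check recommended in the warning is still the authoritative test. The address 1.2.3.4 is just the placeholder from the sample output.

```python
import socket
import struct
from typing import Optional

NTP_PORT = 123
# Seconds between the NTP epoch (1900-01-01) and the Unix epoch (1970-01-01).
NTP_TO_UNIX = 2208988800


def build_request() -> bytes:
    """48-byte SNTP client request: LI=0, version=3, mode=3 (client)."""
    return bytes([0x1B]) + bytes(47)


def parse_transmit_time(packet: bytes) -> float:
    """Return the server's transmit timestamp (bytes 40-47) as Unix seconds."""
    if len(packet) < 48:
        raise ValueError("NTP reply shorter than 48 bytes")
    seconds, fraction = struct.unpack("!II", packet[40:48])
    return seconds - NTP_TO_UNIX + fraction / 2**32


def probe(server: str, timeout: float = 3.0) -> Optional[float]:
    """Send one query; return the server's clock, or None if no valid reply."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        try:
            sock.sendto(build_request(), (server, NTP_PORT))
            reply, _ = sock.recvfrom(512)
        except OSError:  # timeout, unreachable network, name lookup failure
            return None
    try:
        return parse_transmit_time(reply)
    except ValueError:
        return None


if __name__ == "__main__":
    import time

    server = "1.2.3.4"  # placeholder address from the sample warning
    server_time = probe(server)
    if server_time is None:
        print(f"{server}: no NTP reply received")
    else:
        # Rough offset only: network latency is ignored.
        print(f"{server}: offset vs. this host ~ {server_time - time.time():+.3f} s")
```

A None result from a host that does answer ping lines up with what the warning describes; a valid reply means the problem is more likely on the path between the Data Mover and the NTP server.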

Besides Pass and N/A, a health check can return three other results: Info, Warning, and Error. Info is purely informational; in the example above, it lists all the parameters that have been changed from the EMC default (most likely on purpose, too). Warnings are not much to worry about either. They mostly let you know about potential issues or places where you might not be following best practice. These kinds of messages mean you may have a problem down the road if things get worse, but they do not indicate a direct impact right now. The most severe result is Error, which means there is a problem you should address right away. All of these come with basic instructions on how to resolve the problem (or at least where to look), and I would only recommend opening a support ticket if you are getting Errors that you cannot solve on your own.
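If you run nas_checkup regularly, you can save its output (for example, “nas_checkup > checkup.txt”) and sort the summary lines into the buckets described above. The Python sketch below is a hypothetical helper, not part of the VNX tooling. It assumes summary lines look like the ones in the example: a component name, a colon, a description starting with “Checking”, a dot leader, then a one-word status. Only Pass, N/A, Info, and Warn appear in the sample output, so any other status token is conservatively treated as an error that needs action.

```python
import re
import sys
from typing import Dict, List

# Summary line: component, colon, "Checking ..." text, dot leader, status word.
# The "…" in the character class also accepts copies with typographic ellipses.
SUMMARY = re.compile(
    r"^(Control Station|Blades|Storage System)\s*:\s*(Checking .+?)[.…\s]+(\S+)$"
)

BENIGN = {"Pass", "N/A"}     # nothing to do
ADVISORY = {"Info", "Warn"}  # read the details; no direct impact yet


def triage(output: str) -> Dict[str, List[str]]:
    """Sort nas_checkup summary lines into ok / review / act buckets."""
    buckets: Dict[str, List[str]] = {"ok": [], "review": [], "act": []}
    for line in output.splitlines():
        match = SUMMARY.match(line.strip())
        if not match:
            continue  # headers, separators, and detail text are ignored
        component, check, status = match.groups()
        label = f"{component}: {check} [{status}]"
        if status in BENIGN:
            buckets["ok"].append(label)
        elif status in ADVISORY:
            buckets["review"].append(label)
        else:
            # Any other token (e.g. an error) is treated as needing action.
            buckets["act"].append(label)
    return buckets


if __name__ == "__main__":
    # Usage: python triage.py checkup.txt   (file name is just an example)
    if len(sys.argv) > 1:
        with open(sys.argv[1]) as f:
            result = triage(f.read())
        for bucket in ("act", "review"):
            for label in result[bucket]:
                print(f"{bucket.upper():6} {label}")
        print(f"{len(result['act'])} check(s) need action")
```

Treating unknown statuses as "act" errs on the side of caution: a new or unexpected result gets a human look instead of being silently filed as OK.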

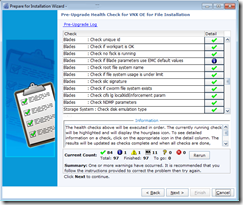

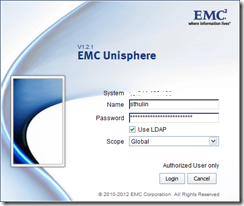

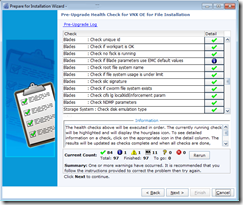

Another way to run a health check on VNX FILE is through the pre-upgrade wizard. Launch USM (Unisphere Service Manager) and follow the prompts to start the “Prepare for Installation” task.


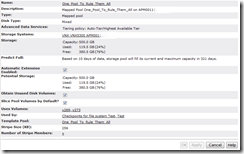

Once this task starts, it kicks off a health check to make sure everything is OK.

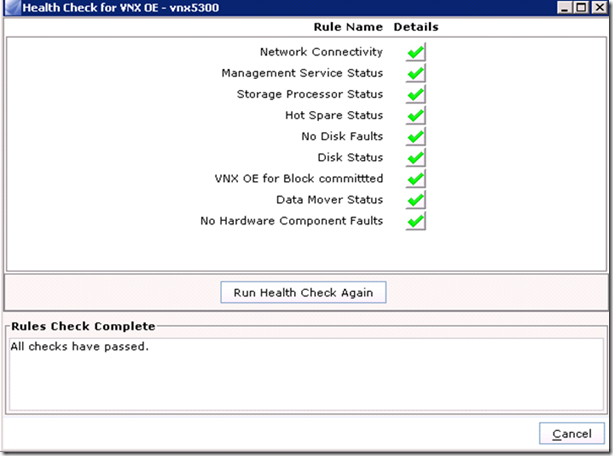

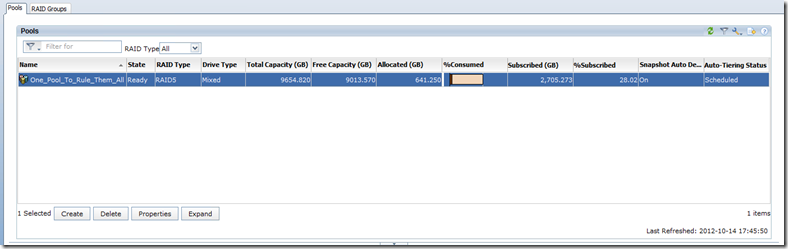

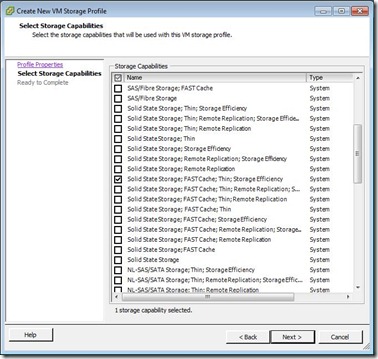

Health Check of VNX Block

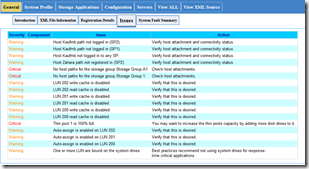

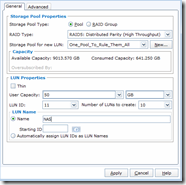

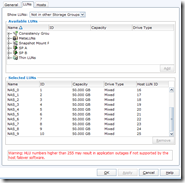

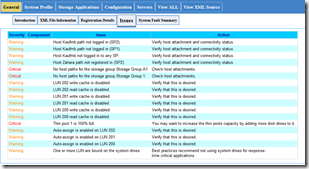

The Block health check is also done in USM and can be found under the “Diagnostic” section. Simply click “Verify Storage System” to generate a back-end health report. Once the wizard starts, it gathers information about the array and then generates an XML file for you to review.


The check reviews events dating back to the beginning of the logs and displays any faults it finds. Keep that in mind: if you had a problem several days ago but don’t right now, it will still report a fault. If issues are found, click “Display Issue Report” to open the XML file, then click the “Issues” tab in the web page.

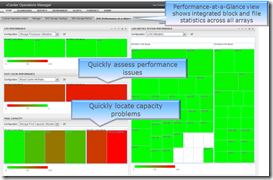

As you can see from the results, I have some warnings and some critical errors. As I said before, warnings are there to make sure you know that something might be up, but it is not making an impact yet. Most of mine appear because this is a lab box: not all of my hosts are logged in, or I’m missing some write cache. The critical alerts are what you should be concerned about, and if you have trouble resolving them, open a support ticket to have them inspected.
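Because Verify Storage System reports faults from the entire log history, it helps to separate recent critical issues from older ones that may already be cleared. The Python sketch below assumes you have already pulled each issue’s timestamp, severity, and description out of the report; the XML layout is not shown here, so that extraction step is left out. The Issue record, the “Critical” severity string, and the seven-day window are illustrative assumptions, not USM settings.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Iterable, List


@dataclass
class Issue:
    """Hypothetical record for one entry from the Verify Storage System report."""
    logged: datetime
    severity: str  # assumed values, e.g. "Warning" or "Critical"
    description: str


def current_criticals(
    issues: Iterable[Issue], now: datetime, window: timedelta = timedelta(days=7)
) -> List[Issue]:
    """Keep critical issues logged within `window` before `now`.

    Older criticals are dropped from this list, but that does not prove they
    were resolved; they still deserve a quick look.
    """
    cutoff = now - window
    return [
        issue for issue in issues
        if issue.severity.lower() == "critical" and issue.logged >= cutoff
    ]


if __name__ == "__main__":
    now = datetime(2012, 9, 1)
    report = [  # made-up example entries
        Issue(datetime(2012, 8, 31, 14, 0), "Critical", "example: recent fault"),
        Issue(datetime(2012, 8, 1, 9, 30), "Critical", "example: old fault"),
        Issue(datetime(2012, 8, 31, 14, 5), "Warning", "example: host not logged in"),
    ]
    for issue in current_criticals(report, now):
        print(issue.logged, issue.description)
```

Passing `now` explicitly keeps the filter deterministic and easy to test; in practice you would pass `datetime.now()`.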

These are some great ways to see whether there really is a problem with your system. Feel free to let me know if you have any questions about them.
